As investment in technology surges, a new worry, dubbed ‘AI Blowback’, is taking hold among ESG fund managers. These investors, who once gravitated towards the tech sector for its promise of low carbon footprints and lucrative returns, now find themselves wrestling with the unforeseen complexities and risks associated with advances in artificial intelligence.

Marcel Stotzel, a seasoned portfolio strategist at Fidelity International based in London, has warned of a potential ‘AI Blowback’ scenario, in which an unforeseen event triggers a substantial market downturn. His concern hinges on the unpredictability of AI, particularly in applications such as autonomous fighter jets, where the stakes of a malfunction are exceptionally high. Fidelity, along with other fund managers, is engaging with developers of such AI technologies and advocating for safety measures such as an emergency ‘kill switch’ to stop AI systems from deviating catastrophically from their intended course.

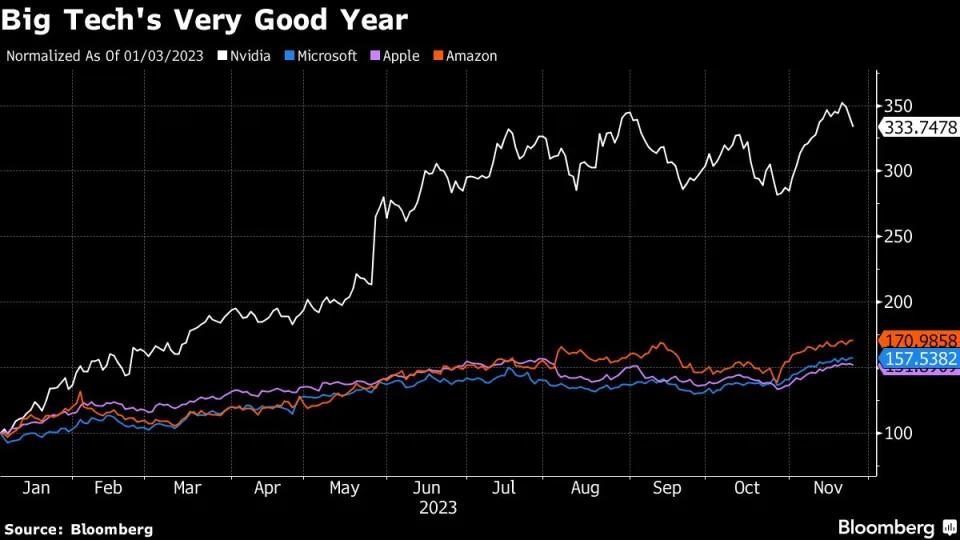

The ESG investment sector, having embraced technology heavily, is particularly exposed to these AI-related risks. Data from Bloomberg Intelligence show that funds with explicit environmental, social, and governance mandates hold more in technology than in any other sector. The world’s largest ESG exchange-traded fund is predominantly tech-centric, with major holdings in tech giants such as Apple Inc., Microsoft Corp., Amazon.com Inc., and Nvidia Corp.

These tech behemoths are at the vanguard of AI innovation. Recent events have brought into sharp focus the industry’s internal conflicts regarding the pace and direction of AI development. OpenAI, known for its groundbreaking ChatGPT, made headlines with the abrupt dismissal and subsequent reinstatement of its CEO, Sam Altman. This incident sparked widespread speculation and highlighted the internal debates over the balance between ambition and the societal implications of AI.

Apple Inc. has adopted a more cautious approach to AI, with CEO Tim Cook emphasizing the need to address various issues associated with the technology. Other major players, including Microsoft, Amazon, Alphabet Inc., and Meta Platforms Inc., have committed to implementing voluntary safeguards to minimize AI’s potential for misuse and bias.

Stotzel notes that the risks associated with AI are not confined to startups but also weigh heavily on the world’s leading tech corporations. Other major investors share this view. The New York City Employees’ Retirement System, a substantial U.S. public pension plan, is closely monitoring AI usage within its portfolio companies. Generation Investment Management, co-founded by former U.S. Vice President Al Gore, is intensifying its research into generative AI and holding regular discussions with the companies it invests in about both the risks and opportunities presented by AI.

Norway’s $1.4 trillion sovereign wealth fund has urged companies to take seriously the grave and uncharted dangers posed by AI. The rise of OpenAI’s ChatGPT, described as the fastest-growing internet application in history, is a testament to the rapid expansion and influence of AI. Amid this growth, tech giants involved in similar technologies have seen their stock values surge.

However, the lack of regulatory frameworks and historical data regarding AI’s long-term performance is a source of concern for experts like Crystal Geng, an ESG analyst at BNP Paribas Asset Management in Hong Kong. Geng points out the difficulty in quantifying the social implications of AI, such as job displacement.

Jonas Kron, the chief advocacy officer at Trillium Asset Management in Boston, is pushing for greater transparency in AI development. Trillium has even filed a shareholder resolution with Alphabet Inc., Google’s parent company, seeking detailed information about its AI algorithms. Kron emphasizes the governance risks associated with AI and notes the urgent need for regulatory intervention to prevent discrimination and misuse of personal data.

The number of AI-related incidents and controversies has skyrocketed since 2012, highlighting the urgency of addressing these issues. Investors in major tech companies are demanding increased transparency over AI algorithms, and labor unions are calling for guidelines to protect against AI-induced harms, such as worker discrimination, election disinformation, and automation-driven layoffs.

According to Bloomberg Intelligence, the EU may soon regulate AI applications deemed ‘high risk’, such as social media recommendation algorithms and employment management tools. Such systems would require thorough assessment and registration before being launched in the EU market, underscoring the growing global concern over the unchecked expansion of AI technology.